How to Deal With a Really Bad DeepSeek

Author information

- Written by Josefa

- Date written

Body

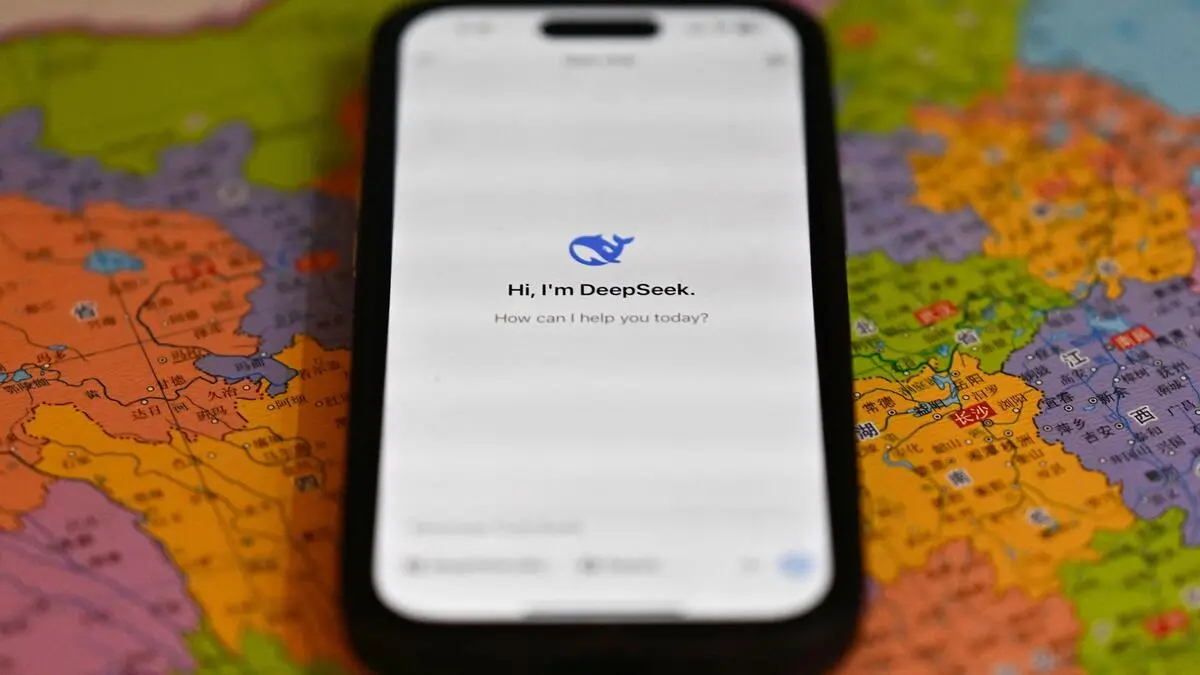

DeepSeek has already endured some "malicious attacks" resulting in service outages that have forced it to limit who can sign up. These benefits can lead to better outcomes for patients who can afford to pay for them. It's easy to see the combination of techniques that leads to large efficiency gains compared with naive baselines. They were also interested in monitoring fans and other parties planning large gatherings with the potential to turn into violent events, such as riots and hooliganism. The licensing restrictions reflect a growing awareness of the potential misuse of AI technologies. The model is open-sourced under a variation of the MIT License, allowing commercial use with specific restrictions. A revolutionary AI model for holding digital conversations. Nous-Hermes-Llama2-13b is a state-of-the-art language model fine-tuned on over 300,000 instructions. The model excels at delivering accurate and contextually relevant responses, making it ideal for a wide range of applications, including chatbots, language translation, content creation, and more. Enhanced Code Editing: The model's code editing functionality has been improved, enabling it to refine and improve existing code, making it more efficient, readable, and maintainable.

If you have a lot of money and a lot of GPUs, you can go to the best people and say, "Hey, why would you go work at a company that really can't give you the infrastructure you need to do the work you need to do?" You see people leaving a company to start these kinds of companies, but outside of that it's hard to convince founders to leave. It's non-trivial to master all these required capabilities even for humans, let alone language models. AI models being able to generate code unlocks all kinds of use cases. There's now an open-weight model floating around the internet that you can use to bootstrap any other sufficiently powerful base model into being an AI reasoner. Our final solutions were derived through a weighted majority voting system, which consists of generating multiple solutions with a policy model, assigning a weight to each solution using a reward model, and then choosing the answer with the highest total weight.
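The weighted majority voting step described above can be sketched in a few lines of Python. This is a minimal illustration, not the team's actual code: the function name `weighted_majority_vote` and the sample answers and scores are hypothetical, and the sketch assumes the policy model's answers and the reward model's scores are already available as `(answer, weight)` pairs.

```python
from collections import defaultdict


def weighted_majority_vote(candidates):
    """Return the answer whose summed weight is highest.

    `candidates` is an iterable of (answer, weight) pairs. In the setup
    described in the text, each answer would come from a policy model and
    each weight from a reward model; here they are plain values.
    """
    totals = defaultdict(float)
    for answer, weight in candidates:
        totals[answer] += weight  # accumulate weight per distinct answer
    if not totals:
        raise ValueError("no candidate solutions were provided")
    return max(totals, key=totals.get)


# Hypothetical data: "17" appears three times, but "42" carries more weight.
samples = [("42", 0.9), ("17", 0.5), ("17", 0.3), ("17", 0.2), ("42", 0.3)]
winner = weighted_majority_vote(samples)
print(winner)
```

With these made-up scores, a plain majority vote would pick "17" (three votes to two), while the weighted vote picks "42" because its summed weight is larger. That difference is the reason to weight candidates by a reward score rather than simply counting them.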

The original V1 model was trained from scratch on 2T tokens, with a composition of 87% code and 13% natural language in both English and Chinese. DeepSeek Coder is a capable coding model trained on two trillion code and natural language tokens. This approach combines natural language reasoning with program-based problem-solving. The Artificial Intelligence Mathematical Olympiad (AIMO) Prize, initiated by XTX Markets, is a pioneering competition designed to revolutionize AI's role in mathematical problem-solving. Recently, our CMU-MATH team proudly clinched 2nd place in the Artificial Intelligence Mathematical Olympiad (AIMO) out of 1,161 participating teams, earning a prize. It pushes the boundaries of AI by solving complex mathematical problems akin to those in the International Mathematical Olympiad (IMO). The first of these was a Kaggle competition, with the 50 test problems hidden from competitors. Unlike most teams that relied on a single model for the competition, we used a dual-model approach. This model was fine-tuned by Nous Research, with Teknium and Emozilla leading the fine-tuning process and dataset curation, Redmond AI sponsoring the compute, and several other contributors. Hermes 2 Pro is an upgraded, retrained version of Nous Hermes 2, consisting of an updated and cleaned version of the OpenHermes 2.5 Dataset, as well as a newly introduced Function Calling and JSON Mode dataset developed in-house.
